One of the first things you discover in the world of robotics is the complexity of simple tasks. Things that appear simple to humans have potentially infinite variables that we take for granted. Robots don’t have such luxuries.

That’s precisely why much of the industry is focused on repeatable tasks in structured environments. Thankfully, the world of robotic learning has seen some game-changing breakthroughs in recent years, and the industry is on track for the creation and deployment of more adaptable systems.

Last year, Google DeepMind’s robotics team showcased Robotics Transformer (RT-1), which trained its Everyday Robot systems to perform tasks like picking and placing objects and opening drawers. The system was trained on a dataset of 130,000 demonstrations, which resulted in a 97% success rate across “over 700” tasks, according to the team.

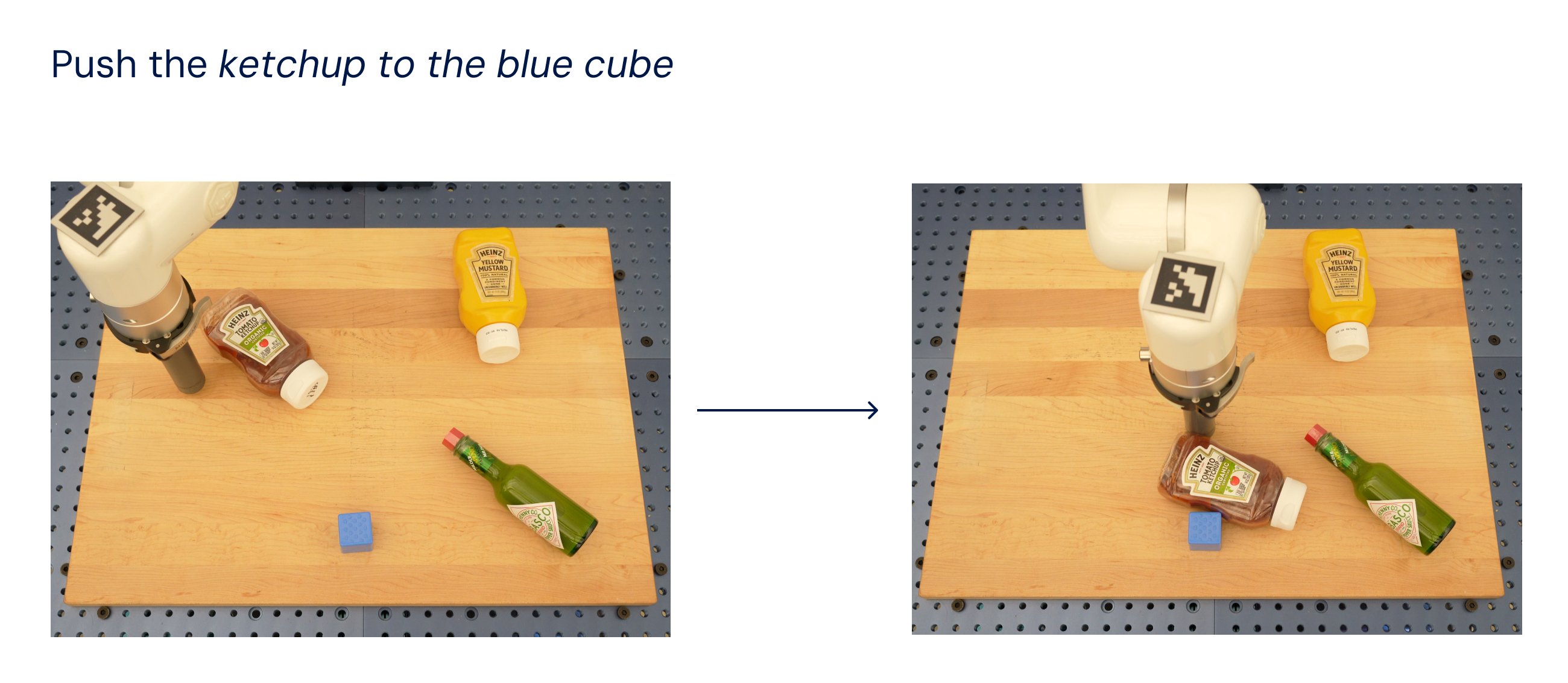

Image Credits: Google DeepMind

Today the team is taking the wraps off RT-2. In a blog post, Vincent Vanhoucke, DeepMind’s Distinguished Scientist and Head of Robotics, says the system allows robots to effectively transfer concepts learned on relatively small datasets to different scenarios.

“RT-2 shows improved generalisation capabilities and semantic and visual understanding beyond the robotic data it was exposed to,” Google explains. “This includes interpreting new commands and responding to user commands by performing rudimentary reasoning, such as reasoning about object categories or high-level descriptions.” The system effectively demonstrates a capacity to determine things like the best tool for a specific novel task based on existing contextual information.

Vanhoucke cites a scenario in which a robot is asked to throw away trash. With many models, the user has to teach the robot to identify what qualifies as trash and then train it to pick that trash up and dispose of it. That level of minutiae doesn’t scale well for systems that are expected to perform an array of different tasks.

“Because RT-2 is able to transfer knowledge from a large corpus of web data, it already has an idea of what trash is and can identify it without explicit training,” Vanhoucke writes. “It even has an idea of how to throw away the trash, even though it’s never been trained to take that action. And think about the abstract nature of trash — what was a bag of chips or a banana peel becomes trash after you eat them. RT-2 is able to make sense of that from its vision-language training data and do the job.”

The team says the success rate on previously unseen tasks improved from 32% with RT-1 to 62% with RT-2.
