
You’ll have to forgive me, I spent most of the morning thinking and writing about VR. In the lead-up to Apple’s expected headset announcement at WWDC next week, I did a one-person crash course into the world of extended reality, from its birth in the laboratories in the ’50s and ’60s to the current crop of headsets. It’s at once an interesting and frustrating journey, and one that has had more than a few overlaps with robotics.

It could be the fact that I’m fresh off a week at Automate, but I spent much of the research process comparing and contrasting the two fields. There’s a surprising bit of overlap. Like robotics, science fiction predated real-world VR by several decades. As with automatons, the precise origin of artificially created virtual worlds is a tough one to pin down, but Stanley Weinbaum’s 1935 short story “Pygmalion’s Spectacles” is often cited as the fictional origin of the VR headset four and a half decades before “virtual reality” was popularized as a term.

From Weinbaum’s text:

“Listen! I’m Albert Ludwig—Professor Ludwig.” As Dan was silent, he continued, “It means nothing to you, eh? But listen—a movie that gives one sight and sound. Suppose now I add taste, smell, even touch, if your interest is taken by the story. Suppose I make it so that you are in the story, you speak to the shadows, and the shadows reply, and instead of being on a screen, the story is all about you, and you are in it. Would that be to make real a dream?”

“How the devil could you do that?”

“How? How? But simply! First my liquid positive, then my magic spectacles. I photograph the story in a liquid with light-sensitive chromates. I build up a complex solution—do you see? I add taste chemically and sound electrically. And when the story is recorded, then I put the solution in my spectacle—my movie projector.”

Another key parallel is the import of university research, government agencies and military funding. MIT’s Lincoln Laboratory played a key role with the introduction of “The Sword of Damocles” head-tracking stereoscopic headset in 1968. NASA picked up the ball the following decade in hopes of creating systems that could simulate space missions here on Earth. Various militaries, meanwhile, developed heads-up displays (HUDs) that are in many ways precursors to modern AR.

As for the differences, well, the biggest is probably that robotics has played a fundamental role in our world for decades now. Entertainment is a tiny corner of a world that has transformed the way work is done in several industries, from manufacturing and warehouses to agriculture and surgery. I’ve heard a lot of people repeat the notion that “robotics is in its infancy.” While the sentiment is clearly true, it’s also important to acknowledge just how far things have already come.

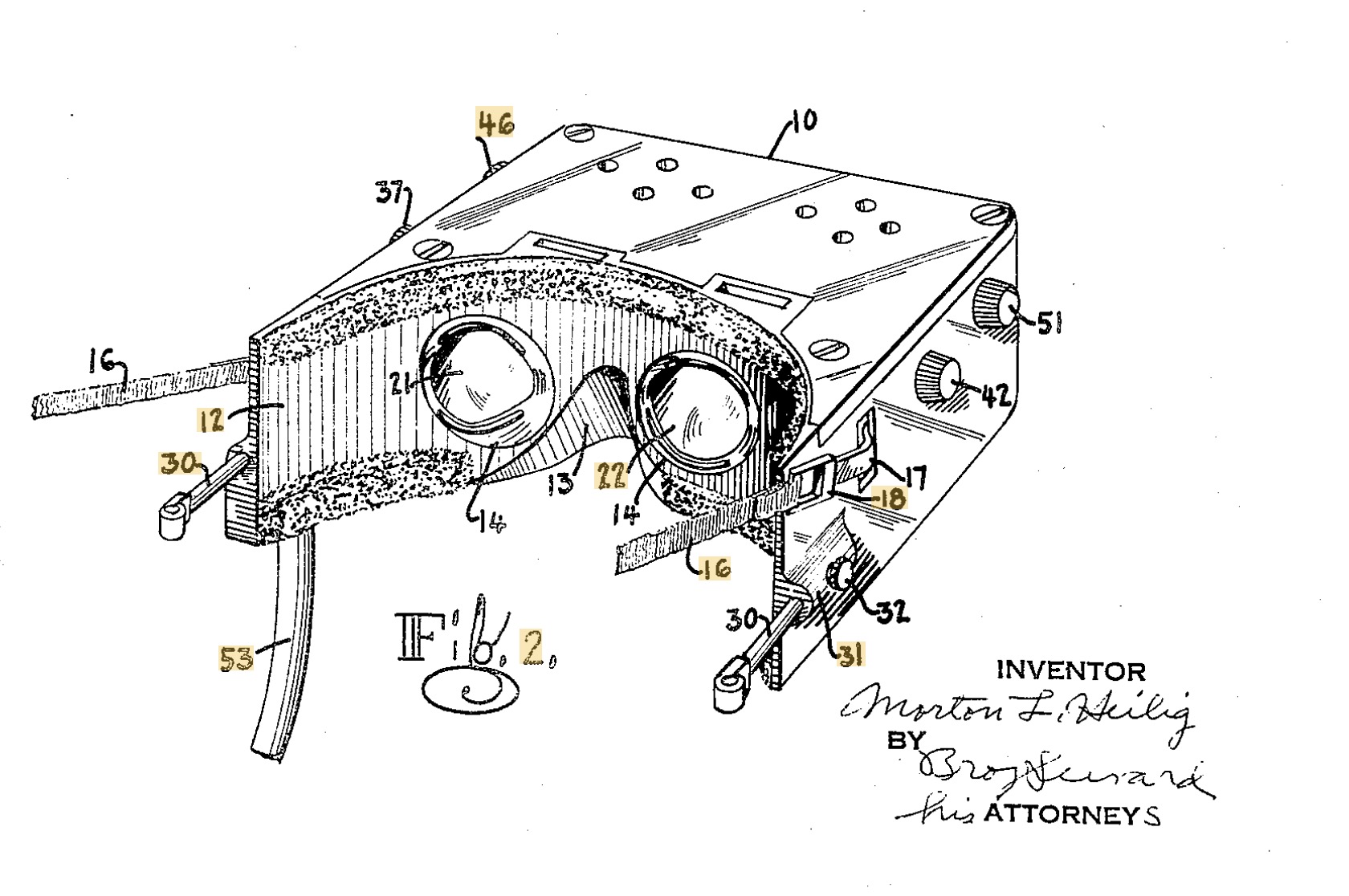

Sketch from Morton Heilig’s 1962 patent. Image Credits: Morton Heilig

It’s a stark contrast to the decades of unfulfilled promise for VR. There have been flashes of brilliance, and it’s something that people feel they want (I, for one, would love a great consumer headset), but we’re all waiting with bated breath for the killer consumer and enterprise applications to propel the industry forward. I know I’m excited to find out what Apple’s been cooking up for the last eight or so years. Certainly the underlying technology has improved by leaps and bounds, which — much like robotics — is indebted to advances made by the smartphone industry.

Of course, I would suggest that — like VR — robotics has struggled on the consumer front. More than 20 years after the release of the first Roomba, robot vacuums remain an outlier. Conversely, the progress on the industrial side has been both steady and largely outside of most people’s day-to-day lives, which is, perhaps, why robotics hasn’t quite received the hype bubble of crypto or generative AI. Is it possible that quiet success has been counterproductive to industry PR?

In the end, this is probably for the best. Crypto has certainly been a victim of its own hype. And while the jury is still out on generative AI (which, for the record, I believe is substantially more interesting and useful than crypto), VR went through a similar experience in the ’90s. The technology hit a fever pitch during the decade but failed to live up to its own promise. The industry is still reeling from that cycle 30 years later.

Robotics also continues to play an important role in education beyond the university. It’s become a fundamental element of STEM (science, technology, engineering, and mathematics). Robots are cool, fun, exciting and therefore the perfect hook for getting kids interested in things like technology and engineering. When I was in Boston a couple of months back, I had a fascinating chat with Alexander Dale, the director of global challenges of MIT’s social impact accelerator, Solve.

He discussed a number of the interesting projects the initiative has supported over the years, but the work of Danielle Boyer really jumped out at me. A member of the Ojibwe tribe, Boyer has focused much of her young career on helping to bring the elements of STEM to the underserved members of indigenous communities through her not-for-profit STEAM Connection organization.

Image Credits: STEAM Connection

Boyer has developed low-cost robotics kits that can be provided to students free of charge (with help from corporate sponsors). Her organization has shipped more than 8,000 of her Every Kid Gets a Robot kit to date. Earlier this month, we sat down to discuss her work.

Q&A with Danielle Boyer

How did you get into this world?

I started teaching when I was 10 years old, because I saw that tech wasn’t accessible for my family and I. That was inspired by my little sister, Bree, and her interest in science and robotics. I started teaching a kindergarten class, and then when I got to high school, I was interested in joining a robotics team. It took a long time to save up to be able to do that, because it was $600. And I was the only native and one of the only girls. When I got there, I was bullied really badly. I definitely stuck out like a sore thumb.

Tell me a bit about your community.

I’m Ojibwe. I’m from the Sault Tribe, which is in the Upper Peninsula of Michigan. I grew up all over Michigan. Right now my immediate family lives in Troy, Michigan, which is pretty close to Detroit. In my community, there [are] a lot of accessibility issues. Regarding tech, for indigenous peoples in general, we are the least likely demographic to have a laptop, to have access to the internet, to see role models in tech spaces. There’s a huge barrier preventing our youth from seeing themselves in STEM careers, and then actually taking the leap to get into those spaces. It’s not even how hard it is once you get to college, or how unrepresentative it is there — it starts even before that.

Are things beginning to trend in the right direction?

I’m 22. I have not been in tech too long. I don’t even have a tech degree. For me, observing tech patterns and accessibility to tech, there’s a lot of progress, because people are more and more seeing its importance, when they may not have seen the importance in the education surrounding it before. Oftentimes, some tribes are more interested than others. Oftentimes, it’s hard. It’s a hard sell for our community members who are older to see the benefits of technology. I got a comment on Instagram yesterday that was like, “There’s nothing more indigenous than robotics.” Oh, and they were really angry.

They were complaining about technology on Instagram.

Right. To me, it doesn’t matter if [something is] modern or not. We’re modern people. We can do modern things, but there’s also a lot of area for improvement.

You said you don’t have a degree in tech.

No, I dropped out of college.

What were you studying?

I was a double major in electrical engineering and mechanical engineering.

So you were interested in and around tech.

Yeah. I have been designing robots and I have a lot of experience in specifically CAD through SolidWorks, plus electrical engineering and circuit design. All of the robots I designed myself.

Image Credits: STEAM Connection

Are you still interested in entering robotics outside of the philanthropic and teaching spaces?

I would love to go into bio robotics and soft robotics, purely from a standpoint of it being interesting and fun. I don’t think I want a degree in it. I think it would be more research or a hobby. I do think that there is a lot of work to do in the educational space, and I do think that my time is definitely well spent over here. But in terms of being a nerd, I really want to build animal-inspired robots.

Is your current work a hobby that became a career?

Not really, because I was in high school, I was on the robotics team, and then I jumped immediately into my organization. After graduating, I had founded a lot of robotics teams, and I saw a need there. I invented my first robot — Every Kid Gets a Robot — when I was 18. It’s a simple RC-style, app-controlled robot that the kids can assemble. It’s a very simple robot that costs less than $18 to make, and it goes to the kids for free. That’s what I started out with.

What kinds of robots are you currently producing?

I make quite a few robots, but I’ll stick to the two that we make the most. The first one is Every Kid Gets a Robot. That’s the robot that costs less than $18 that goes to the kids for free. The goal is to get a positive representation of STEM into the kids’ hands as affordably as possible. So, basic STEM skills: wiring, programming, assembly, using tools, learning about things like battery safety.

The next robot that we make the most of is the SkoBot. “Sko” is reservation slang for “Let’s go.” It’s a robot that speaks indigenous languages. Basically, it sits on your shoulder with a GoPro strap. It senses motion through a PIR sensor, and then it speaks indigenous languages. It’s an interactive robot kids can talk to, and it can talk back. We also have a less intelligent version that just goes based off of motion sensing to play audio tracks, depending on the internet access. The entire robot is 3D printed, except for the electronics. That’s the robot that the kids are heavily customizing with different traditional elements. We’ve distributed 8,000 free Every Kid Gets a Robot kits. We’ve made a lot more. And then for the SkoBot, we’ve sent out 150.

Image Credits: STEAM Connection

Take me through that early development process. How did you create a kit for that price point?

I did it all through Amazon at first. I was looking for the parts and things, and I was just ordering stuff and doing it, trial-and-error style. To be honest, I didn’t completely know what I was doing when I started. I have some really awesome indigenous mentors in tech, who I’ve been able to ask questions to. I didn’t have a 3D printer at that point, so I was like, “Emergency. Need this CAD printed fast. Please overnight it to me,” because I always do things last minute. And then I was like testing out electronics that didn’t fit into the chassis of the robot. It was a very chaotic starting process where I was like, hey, I want to use an ESP32 development board. I had never used one before. But I had researched a lot about it, watched a lot of YouTube videos.

What is the process like in terms of working with tribes on custom robots?

It’s an interesting conversation, because we really want it to be youth led. A lot of the time it’s us driving the youth conversation surrounding how they want to see themselves represented and what they want conveyed. For example, we work with a school whose students want to have their traditional embroidery incorporated into the robot somehow. So, how do we get that into 3D printing form? Obviously, that’s a huge conversation of how do we represent all these colors accurately. A lot of times, we have people who make regalia for the robots like beaded earrings, ribbon skirts, tutus, hats, stickers, apparel, all those things, because I really wanted the robot to be something that is very unique to indigenous students, and customizable, like Mr. Potato Head–style.

I know you said that Every Kid Gets a Robot can go out to anybody, but do you continue to prioritize indigenous communities?

Yes, I prioritize indigenous communities. They go to up to 94% indigenous communities. We have sent them to other places as well, especially if a corporate sponsor is like, “We want this, but then also this,” and we can do that. I prefer sending it to indigenous youth, because most of the kids we’ve worked with have never even built a robot and don’t know a lot about STEM in general. I want to make that more accessible and interesting. We also branched into more of what the kids are interested in. We’ve been working on comics, and also augmented reality and implementing social media platforms.

News

Image Credits: Nvidia

Nvidia hit a $1 trillion market cap Tuesday morning. There’s already been a slight correction there, but the massive bump is a big vote of confidence for a chip maker that has done a great job diversifying. Robotics has been a big part of that, through efforts like Jetson and Isaac, which are designed to prototype, iterate and help ready systems for manufacturing. This week, the company added Isaac AMR to the list. The platform is designed specifically for autonomous mobile robotic systems.

CEO Jensen Huang outlined the system during a keynote at Computex in Taipei. Says the company:

Isaac AMR is a platform to simulate, validate, deploy, optimize and manage fleets of autonomous mobile robots. It includes edge-to-cloud software services, computing and a set of reference sensors and robot hardware to accelerate development and deployment of AMRs, reducing costs and time to market.

Image Credits: Serve Robotics

Nvidia also backed Uber spinout Serve Robotics, which this week announced plans to deploy “up to” 2,000 of its last-mile delivery robots in the U.S. “We expect our rapid growth on Uber Eats to continue,” co-founder and CEO Ali Kashani told TechCrunch. “We currently have a fleet of 100 robots in Los Angeles, and we expect to operate an increasing number of them on Uber Eats as our coverage and delivery volume on Uber increases.”

They look like Minions. It’s not just me, right?

Image Credits: Google DeepMind

Fun one to round out the week: Barkour, a Google DeepMind benchmark designed to help quantify performance in a quadrupedal robot. Honestly, this feels like a story written by AI to juice SEO traffic, but the division seriously built a dog obstacle course in their labs to help create a baseline for performance.

DeepMind notes:

We believe that developing a benchmark for legged robotics is an important first step in quantifying progress toward animal-level agility. . . . Our findings demonstrate that Barkour is a challenging benchmark that can be easily customized, and that our learning-based method for solving the benchmark provides a quadruped robot with a single low-level policy that can perform a variety of agile low-level skills.

City Spotlight: Atlanta

Image Credits: Bryce Durbin

On June 7, TechCrunch is going to (virtually) be in Atlanta. We have a slate of amazing programming planned, including the mayor himself, Andre Dickens. If you are an early-stage Atlanta-based founder, apply to pitch to our panel of guest investors/judges for our live pitching competition. The winner gets a free booth at TechCrunch Disrupt this year to exhibit their company in our startup alley. Register here to tune in to the event.

Image Credits: Bryce Durbin / TechCrunch

Let Actuator be your inbox’s best friend.

Meet me at the dog run by Brian Heater originally published on TechCrunch
