Robots made their stage debut the day after New Year’s 1921. More than half a century before the world caught its first glimpse of George Lucas’ droids, a small army of silvery humanoids took to the stages of the First Czechoslovak Republic. They were, for all intents and purposes, humanoids: two arms, two legs, a head, the whole shebang.

Karel Čapek’s play, R.U.R. (Rossumovi Univerzální Roboti), was a hit. It was translated into dozens of languages and played across Europe and North America. The work’s lasting legacy, however, was its introduction of the word “robot.” The meaning of the term has evolved a good bit in the intervening century, as Čapek’s robots were more organic than machine.

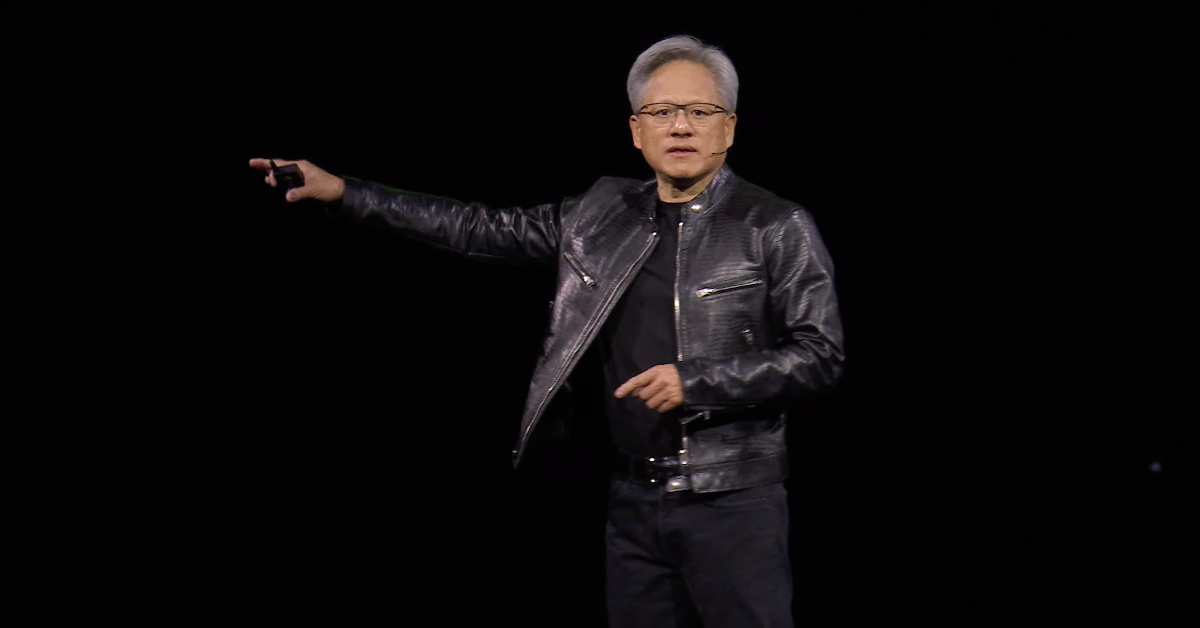

Decades of science fiction have, however, ensured that the public image of robots hasn’t strayed too far from its origins. For many, the humanoid form is still the platonic robot ideal — it’s just that the state of technology hasn’t caught up to that vision. Earlier this week, Nvidia held its own on-stage robot parade at its GTC developer conference, as CEO Jensen Huang was flanked by images of a half-dozen humanoids.

While the concept of the general-purpose humanoid has, in essence, been around longer than the word “robot,” until recently its realization has seemed wholly out of grasp. We’re very much not there yet, but for the first time, the concept has appeared over the horizon.

What is a “general-purpose humanoid?”

Image Credits: Nvidia

Before we dive any deeper, let’s get two key definitions out of the way. When we talk about “general-purpose humanoids,” the fact is that both terms mean different things to different people. In conversation, most people take a Justice Potter Stewart “I know it when I see it” approach to both.

For the sake of this article, I’m going to define a general-purpose robot as one that can quickly pick up skills and essentially do any task a human can do. One of the big sticking points here is that multi-purpose robots don’t suddenly go general-purpose overnight.

Because it’s a gradual process, it’s difficult to say precisely when a system has crossed that threshold. There’s a temptation to go down a bit of a philosophical rabbit hole with that latter bit, but for the sake of keeping this article under book length, I’m going to go ahead and move on to the other term.

I received a bit of (largely good-natured) flak when I referred to Reflex Robotics’ system as a humanoid. People pointed out the plainly obvious fact that the robot doesn’t have legs. Putting aside for a moment that not all humans have legs, I’m fine calling the system a “humanoid” or, more specifically, a “wheeled humanoid.” In my estimation, it resembles the human form closely enough to fit the bill.

A while back, someone at Agility took issue when I called Digit “arguably a humanoid,” suggesting that there was nothing arguable about it. What’s clear is that the robot isn’t as faithful an attempt to recreate the human form as some of the competition. I will admit, however, that I may be somewhat biased, having tracked the robot’s evolution from its precursor Cassie, which more closely resembled a headless ostrich (listen, we all went through an awkward period).

Another element I tend to consider is the degree to which the humanlike form is used to perform humanlike tasks. This element isn’t absolutely necessary, but it’s an important part of the spirit of humanoid robots. After all, proponents of the form factor will quickly point out the fact that we’ve built our worlds around humans, so it makes sense to build humanlike robots to work in that world.

Adaptability is another key point used to defend the deployment of bipedal humanoids. Robots have had factory jobs for decades now, and the vast majority of them are single-purpose. That is to say, they were built to do a single thing very well a lot of times. This is why automation has been so well-suited for manufacturing — there’s a lot of uniformity and repetition, particularly in the world of assembly lines.

Brownfield vs. greenfield

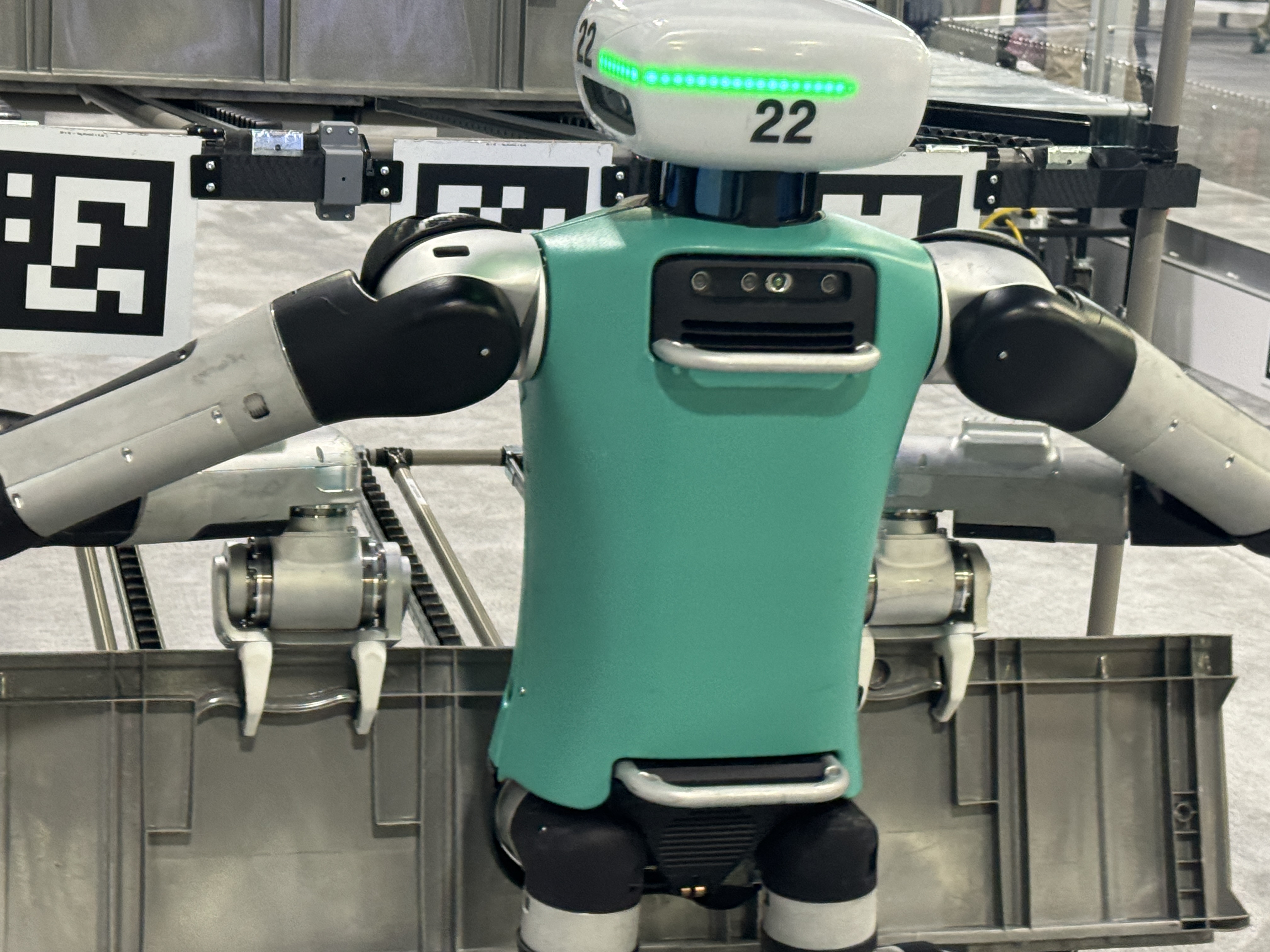

Image Credits: Brian Heater

The terms “greenfield” and “brownfield” have been in common usage for several decades across various disciplines. The former is the older of the two, describing undeveloped land (quite literally, a green field). Coined in contrast to the earlier term, brownfield refers to development on existing sites. In the world of warehouses, it’s the difference between building something from scratch and working with something that’s already there.

There are pros and cons to both. Brownfields are generally more time- and cost-effective, as they don’t require starting from scratch, while greenfields afford the opportunity to build a site entirely to spec. Given infinite resources, most corporations will opt for a greenfield. Imagine the performance of a space built from the ground up with automated systems in mind. That’s a pipe dream for most organizations, so when it comes time to automate, a majority of companies seek out brownfield solutions, doubly so when they’re first dipping their toes into the robotic waters.

Given that most warehouses are brownfield, it ought to come as no surprise that the same can be said for the robots designed for these spaces. Humanoids fit neatly into this category. In fact, in a number of respects, they are among the brownest of brownfield solutions. This gets back to the earlier point about building humanoid robots for their environments. You can safely assume that most brownfield factories were designed with human workers in mind. That often comes with elements like stairs, which present an obstacle for wheeled robots. How large that obstacle ultimately is depends on a lot of factors, including layout and workflow.

Baby steps

Image Credits: Figure

Call me a wet blanket, but I’m a big fan of setting realistic expectations. I’ve been doing this job for a long time and have survived my share of hype cycles. There’s an extent to which they can be useful, in terms of building investor and customer interest, but it’s entirely too easy to fall prey to overpromises. This includes both stated promises around future functionality and demo videos.

I wrote about the latter last month in a post cheekily titled, “How to fake a robotics demo for fun and profit.” There are a number of ways to do this, including hidden teleoperation and creative editing. I’ve heard whispers that some firms are speeding up videos, without disclosing the information. In fact, that’s the origin of humanoid firm 1X’s name — all of their demos are run in 1X speed.

Most in the space agree that disclosure is important — even necessary — on such products, but there aren’t strict standards in place. One could argue that you’re wading into a legal gray area if such videos play a role in convincing investors to plunk down large sums of money. At the very least, they set wildly unrealistic expectations among the public — particularly those who are inclined to take truth-stretching executives’ words as gospel.

That can only serve to harm those who are putting in the hard work while operating in reality with the rest of us. It’s easy to see how hope quickly diminishes when systems fail to live up to those expectations.

The timeline to real-world deployment contains two primary constraints. The first is mechatronic, i.e., what the hardware is capable of. The second is software and artificial intelligence. Without getting into a philosophical debate around what qualifies as artificial general intelligence (AGI) in robots, one thing we can certainly say is that progress has been, and will continue to be, gradual.

As Huang noted at GTC the other week, “If we specified AGI to be something very specific, a set of tests where a software program can do very well — or maybe 8% better than most people — I believe we will get there within five years.” That’s on the optimistic end of the timeline I’ve heard from most experts in the field. A range of five to 10 years seems common.

Before hitting anything resembling AGI, humanoids will start as single-purpose systems, much like their more traditional counterparts. Pilots are designed to prove out that these systems can do one thing well at scale before moving on to the next. Most people are looking at tote moving for that lowest-hanging fruit. Of course, your average Kiva/Locus AMR can move totes around all day, but those systems lack the mobile manipulators required to move payloads on and off themselves. That’s where robot arms and end effectors come in, whether or not they happen to be attached to something that looks human.

Speaking to me the other week at the Modex show in Atlanta, Dexterity founding engineer Robert Sun floated an interesting point: humanoids could provide a clever stopgap on the way to lights-out (fully automated) warehouses and factories. Once full automation is in place, you won’t necessarily require the flexibility of a humanoid. But can we reasonably expect these systems to be fully operational in time?

“Transitioning all logistics and warehousing work to roboticized work, I thought humanoids could be a good transition point,” Sun said. “Now we don’t have the human, so we’ll put the humanoid there. Eventually, we’ll move to this automated lights-out factory. Then the issue of humanoids being very difficult makes it hard to put them in the transition period.”

Take me to the pilot

Image Credits: Apptronik/Mercedes

The current state of humanoid robotics can be summed up in one word: pilot. It’s an important milestone, but one that doesn’t necessarily tell us everything. Pilot announcements arrive as press releases announcing the early stage of a potential partnership. Both parties love them.

For the startup, they represent real, provable interest. For the big corporation, they signal to shareholders that the firm is engaging with the state of the art. Rarely, however, are real figures mentioned. Those generally enter the picture when we start discussing purchase orders (and even then, often not).

The past year has seen a number of these announced. BMW is working with Figure, while Mercedes has enlisted Apptronik. Once again, Agility has a head start on the rest, having completed its pilots with Amazon. We are, however, still waiting for word on the next step. It’s particularly telling that, in spite of the long-term promise of general-purpose systems, just about everyone in the space is beginning with the same basic functionality.

Two legs to stand on

Image Credits: Brian Heater

At this point, the clearest path to AGI should look familiar to anyone with a smartphone. Boston Dynamics’ Spot deployment provides a clear real-world example of how the app store model can work with industrial robots. While there’s a lot of compelling work being done in the world of robot learning, we’re a ways off from systems that can figure out new tasks and correct mistakes on the fly at scale. If only robotics manufacturers could leverage third-party developers in a manner similar to phone makers.

Interest in the category has increased substantially in recent months, but speaking personally, the needle hasn’t moved too much in either direction for me since late last year. We’ve seen some absolutely killer demos, and generative AI presents a promising future. OpenAI is certainly hedging its bets, first investing in 1X and — more recently — Figure.

A lot of smart people have faith in the form factor and plenty of others remain skeptical. One thing I’m confident saying, however, is that whether or not future factories will be populated with humanoid robots on a meaningful scale, all of this work will amount to something. Even the most skeptical roboticists I’ve spoken to on the subject have pointed to the NASA model, where the race to land humans on the moon led to the invention of products we use on Earth to this day.

We’re going to see continued breakthroughs in robotic learning, mobile manipulation and locomotion (among others) that will impact the role automation plays in our daily life one way or another.
